Is banning TikTok the right approach?

There's been a lot of news recently about national governments issuing diktats banning the use of TikTok on government-issued devices. These decisions echo those surrounding Huawei, and much of the reasoning seems to rest on the implicit assumption that you can't trust technology from China because the Chinese State could gain access to the data it processes.

It falls into a category of risk management that I often refer to as the "bogeyman of the internet", a title that tends to change hands between Russia and China, and which comes with the implicit assumptions that:

- Anything associated with that country is inherently suspicious and risky.
- They operate with a level of sophistication that is unmatched and often undetectable.

This is often accompanied by the notion that there is no public evidence to support the claim, so you'll just have to take someone's word for it.

Having prevented, investigated and responded to nation-state attacks for the lion's share of my career (partly the reason I've been asked to be part of RUSI's State Threats Task Force), I can say with reasonable authority that there is at least some basis for these assumptions.

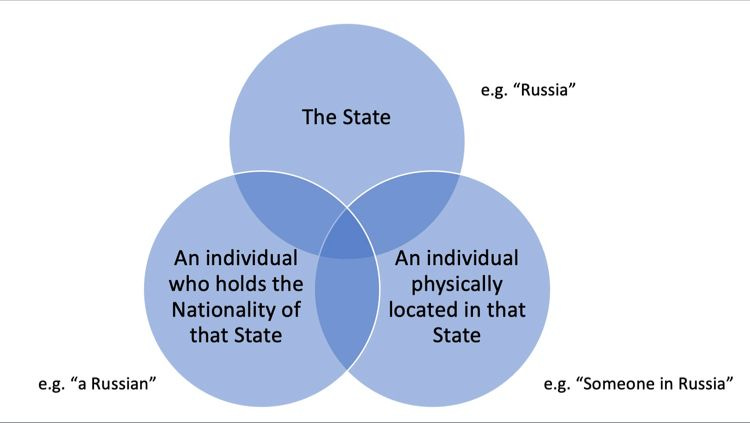

However, the conversation always risks descending into unfounded xenophobia, so it's worth remembering the following graphic when it comes to attribution; the circles overlap, but only in parts:

Fundamentally, though, the risk of *you* being targeted by a nation state depends on several factors, such as the country and industry sector you operate in, who is in your supply chain and, likewise, whose supply chain you are in. The notable exception is operations that carry a higher risk of collateral damage, such as NotPetya.

In other words, not every organisation's risk profile is the same: the threats likely to target them will vary, as will the inherent impact of a breach. The difficulty is that if you *think* you may be a target of a nation state, there is often little information in the public domain on which to base an informed decision, and so the resulting response is often a knee-jerk block or ban.

For something like TikTok, that may not be massively impactful. However, as demonstrated by some of the UK National Cyber Security Centre's early decision-making on Huawei (including this great explainer by its then Technical Director, Dr Ian Levy), a more pragmatic approach is sometimes needed.

So is banning TikTok just common sense, a knee-jerk reaction, or is there a more pragmatic alternative?

Some principles to consider:

We're talking about a ban on installing and using TikTok on a corporate device, one owned by the employer rather than the end user. On that basis, I'm fairly comfortable with the employer having a say on what gets installed. I imagine plenty of other apps are also prohibited, ranging from gambling and gaming apps to those with more mature or adult content. Restricting what you can install, or even which websites you can access, on a corporate device is in itself commonplace.

Pretty much all mobile applications collect information about the device they are installed on. Some may have access to GPS, the camera roll and contacts, and many also collect in-app activity such as the content you consume or the searches and queries you make. This information will be available to the app developers, and potentially to the developers of any 3rd party components, such as advertisers. Various national legislation exists to regulate the handling and management of personal data (e.g. GDPR), but much of it operates on the principle that as long as the subject or user is informed and consents to the intended use, organisations have fairly free rein. After all, how many people read the *pages* of Terms and Conditions and fully understand what is included, or hidden behind complicated "legalese"? Ultimately, with any service you're using:

"If the product is free, you are the product."

Banning something the user base wants will not stop people from doing it; they'll just do it on a personal device instead (or, worse, find a workaround that further compromises the security of the device they're using). That may be enough to simply offload the risk elsewhere, but the concern may well resurface when the boundary between work and personal life becomes blurred. Good examples are corporate email being accessed from a personal device (like the senior leader who wants to use their iPad for everything), or "official use" of TikTok by public-facing officials and comms, PR and marketing teams.

Finally, a slightly tongue-in-cheek suggestion might be to recognise that expecting absolute privacy whilst also posting on social media is largely a contradiction, although I accept that some degree of pseudonymity is achievable.

A less-discussed concern may lie not so much with the security of the app itself as with the content it serves, and the power of a 3rd party (in this case, the Chinese State) to influence it. I'd argue that this is nothing new, and it's worth considering some of the cases presented in this anthology.

Ultimately, we cannot completely eliminate risk from an enterprise, but we can reduce it to a tolerable level. With approaches such as "defence in depth", multiple mechanisms may be needed, both to address different elements of the risk and to provide overlapping coverage should one element fail.

For TikTok, I have no problem with banning it from enterprise devices. In the examples we're discussing, these are, after all, Government-owned devices, and the Government should have the authority to decide what it is and isn't comfortable with. For non-Government entities, you'll need to determine your own risk appetite, but arguably you should do so in tandem with a broader review of 3rd party applications and of staff use of social media. The latter may include providing practical advice (or at least referring staff to authoritative guidance, such as that from the UK's NCSC) on how to use social media in a more secure, privacy-conscious way.